DSC #9: The Ghost in Anouk’s Laptop

by Anouk Lang and Quinn Dombrowski with Annie K. Lamar

February 17, 2021

https://doi.org/10.25740/ys319vz9576

February 2020

Anouk

February 2020. It was about as far as it was possible to be from the sunny nostalgia of kids-playing-out in 1980s Connecticut. Storms were raging up and down the British Isles, some unsettling news about a virus was just beginning to rear its head, the UK had initiated the process to leave the EU, and a significant part of the British university sector, including my own institution, was on strike. But the Data-Sitters Club had just been nominated for the Best Use of DH for Fun in the annual Digital Humanities Awards, and if ever there was a time when the respite of data-play was needed to drown out the literal and figurative storms in the real world, it was now. I came home from a very cold stint on the picket line, thawed out my fingers, opened up my laptop and thought: if the world’s going to end, what better thing to do than feed our Baby-Sitters Club corpus to GPT-2 to see what larfs it might deliver?

GPT-2 (short for Generative Pre-trained Transformer 2) is a large-scale model of language which, in the words of its creators at OpenAI, produces “conditional synthetic text samples of unprecedented quality, where we prime the model with an input and have it generate a lengthy continuation.” In functional terms, it takes what it knows about how language behaves, at least according to a dataset of 8 million (mostly English) web pages considered to be useful by Reddit users, translates the relationships between words and phrases in that 8-million-page corpus into a list of numbers, and then, if given a prompt to start it off, automatically generates text that follows on from that prompt using what it has learnt from the larger corpus. Because it did such a good job of imitating human-generated text, OpenAI initially opted not to release the full GPT-2 model over concerns that it could be used maliciously, and instead released a smaller version of the model for everyone else to use. And use it they did: the months since GPT-2’s release have seen a steady increase in the number of people experimenting with it, not just from computer science but also from the humanities and the cultural industries.
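GPT-2 itself (the full model has about 1.5 billion parameters) is far too large to reproduce here, but the basic loop described above, learning statistics from a corpus and then extending a prompt one word at a time, can be sketched with a much cruder stand-in. The toy below is a bigram model that only remembers which word followed which; it is a swapped-in technique for illustration, not how GPT-2 works internally, and the function names and tiny corpus are hypothetical.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """'Training': record every word that follows each word in the corpus."""
    words = text.split()
    follows = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return follows

def continue_prompt(follows, prompt, n_words=10, seed=0):
    """Extend the prompt one word at a time, sampling each next word from
    the words that followed the previous word in the training corpus."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    output = prompt.split()
    for _ in range(n_words):
        candidates = follows.get(output[-1])
        if not candidates:  # never seen this word followed by anything: stop
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

corpus = "Kristy had a great idea . Claudia had a snack . Kristy called a meeting ."
model = train_bigrams(corpus)
print(continue_prompt(model, "Kristy had", n_words=8))
```

Each generated word here depends only on the single word before it, which is why output from models like this drifts so quickly; GPT-2 conditions each new token on a much longer stretch of preceding text (up to 1,024 tokens).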

Some of this activity is due to Talk To Transformer, a handy web-based GUI requiring no technical expertise. Until recently, Talk To Transformer offered users the opportunity to divert themselves by typing in a few words or sentences, on the basis of which it would then generate chunks of text somewhere on the continuum from amusing incongruity to uncanny likeness. Janelle Shane used it, along with other neural networks, to compile a collection of AI-generated texts on her AI Weirdness website, the funniest of which suggest that the process works best – at least for the purposes of comedy – when the prompts come from identifiable genres that the model can, so to speak, recognize and reproduce, such as names of cookies, tweets from WeRateDogs, or Christmas carols (for extra laughs, listen to what happened when a voice actor recorded one of the creepier carols). Another of my favourite GPT-2 toys is AI Dungeon, a text-based fantasy game which brings the “yes, and” of improv to bear on the genre of the Choose Your Own Adventure story, creating an interactive adventure game many orders of magnitude better than its print culture predecessor. Here it is seamlessly incorporating instructions about installing software packages into a fantasy narrative. Or, if you want to run something on your own machine, try the packages Robin Sloan put together to generate snippets of sci-fi.

So, like many DH folks, I’d had a play with, and a good chortle at, automatic text generators built with neural networks. For this more extended foray into GPT-2 using our BSC corpus, I decided to use an off-the-shelf application in beta. While I try wherever possible to use open-source code and freely available software, this can involve a serious investment of time in learning new tools, and as I said, it was mid-semester, there was a strike, and the seismic rumblings of what Covid-19 would mean for teaching and assessment were beginning to shake the ground underfoot. An out-of-the-box application offered a way to see whether the results would be worth the time needed to figure out how to work with the model in a programming language. I fed our BSC corpus into the application, waited a few hours, and then watched as my screen began to fill up with neural-net-generated Baby-Sitters Club fanfiction.

“Okay, you guys. What kind of dad does he have?”

“Yes,” said Claudia. “I think he’s outgoing. A lot of things. I think that’s cool, just because I think it’s a good family and he doesn’t have much in common.”

“What is that?” I asked.

“He loves it. He loves going to his house and I just think about it, like the teachers come and help out.”

“Oh. I love him.”

I didn’t hear the answer. I was excited about working out next to him.

One of the interesting things to observe in neural-net generated text is the interaction between the thematic preoccupations and stylistic tics of the two datasets on which the model has been trained (in this case, GPT-2’s training data selected according to its popularity with Reddit users, and the corpus of BSC books). The AI Dungeon example which incorporates the tedious steps of software installation into its fantasy narrative is one innocuous example of this kind of genre-mashing. Janelle Shane supplies another: when she fed Harry Potter fanfiction to a neural network that had previously been trained on recipes, she found that it had trouble keeping on track: hostile exchanges between Harry and Snape would tend to devolve into conversations about recipes. Even in the wholesome family-and-friends-oriented chunk of pseudo-BSC prose above, the murky adult world of the twenty-first century internet is beginning to seep through. I pulled the handle of my text generation slot machine and tried again.

I don’t like his dad. I like him, too. I don’t like what he thinks of me. Not sure what he thinks of me. Not sure what he thinks. Not sure what I think of him. Not sure what his parents want me to know. Not sure what he wants.

If you are trying to distract yourself from the apocalypse, you’ve got to be pretty happy with a neural net that, trained on language from the era of the internet and given BSC texts to chew on, presents you with prose that sounds like Gertrude Stein if she were a boy-crazy teenager. (And also, I call dibs on training a neural net on Stein’s collected works, if Tanya Clement or Natalia Cecire don’t beat me to it.) This scrap of Steinese was not just pleasingly absurd but also curiously redolent of what it is like to be a teenager: anxious not only about what other people think of you, but about what other people think you think of them, and what the people connected to them think that you think of other people, and so on. While I wouldn’t characterize this as revealing the latent structures of teen-speak, fictionally articulated inner monologues, or indeed anything else, it’s hard to shake the feeling that it’s nonetheless capturing something.

I’ll also say that though I’m sceptical about the inside-baseball character of “explainable AI” as it currently stands, I’m confident that scholars of literature, narrative and language have something to add to those conversations. There is something to be gained from asking people who know how texts work to help identify the textual features a neural network is picking up on, and the ways it is recombining and conjoining those features. The literary equivalent of being able to identify the node in an image classifier that is looking out for pointy ears in pictures of cats, perhaps. Except that, while identifying pointy ears as a salient feature for classifying pictures of cats is not illuminating for photography, art history or veterinary science, it remains a tantalising possibility that literary studies might learn something non-trivial from texts generated by a neural net.

One of the things that neural nets have to “learn” when producing text longer than aphorisms is the capacity to keep track of what has previously occurred. This can range from the simple – knowing that a parenthesis, once opened, must be closed – to the more complex. Our BSC model still had, evidently, some way to go in this direction.

“I’ll call Claudia’s room,” said Claudia. “Don’t you?”

“Yeah.” Claudia said.

Claudia smiled at Claudia. “I’m going to call my parents tomorrow. They’ll be all right now.

“But, don’t worry about it,” said Claudia.

“Oh, it’s okay,” she said. “Okay. I’ll call Claudia.

“Let’s leave!” shouted Claudia.

“Don’t you call Claudia?” said Claudia, as she grabbed her arm.

“I’m not!” she said. “I’m tired. I don’t want to talk to Claudia anymore.”

“Call Claudia. Come, Claud.”

“Come, and tell me about the meetings.”

Claudia was lying in bed. She was shaking her head. “My mind is on you, too. I’m glad you won’t be here. You’re the right one, too. You don’t belong to the BSC.”

Claudia didn’t say anything. She nodded.

While a story peopled almost entirely by Claudias (who talk to each other, grab each other’s arms, and hang out in each other’s bedrooms) has its charms, it shows the neural network to still be at the point of blissful ignorance about the need to differentiate characters in a conversation. This is not a feature of narrative that any class or textbook on narrative will ever bother to mention, but it is a convention that, once revealed through its rupture, presents itself as non-negotiable: even Joyce’s, B.S. Johnson’s and, yes, Stein’s characters remain stable and distinguishable from others in conversational exchange. And while the extract is hall-of-mirrors ridiculous, it also feels as if it captures something of the intense sociability of the BSC texts, where there is an enormous amount of talking with, emoting at, and being in close physical proximity to, one’s friends.

“We had some great parents. Mom and Dad had kids. Mom and Dad had our own little sister Stacey, who’s two. She did a lot of big things and stuff like that.”

“What do you think, Stacey?” asked Claudia, shaking her head.

“Well, it’s important to remember that we’re not living in an alternate world. You know, it’s hard.”

“But it’s a place where we can see. And we still live in an alternate world, so that you can see where you’re born.”

“That’s great. Right.”

“Or maybe, okay?” asked Stacey.

“It’s hard,” I added. “Just not when you need to.”

“Yeah.”

“That’s right,” agreed Claudia.

“Yeah. Just not when you need to. You don’t know that what you’re born is on the outside, you know.”

“Sure, it’s not that easy. Not when you need to. But it’s hard to have at least one person or someone who can come and have you with you. That’s also why you have to be best friends.”

“Well, I think you can just do a little thing,” agreed Claudia.

By this stage, a little further on in its training, the neural net has advanced to the point where it understands that conversations need to occur between different people. And, while the conversational swerve from friends and family to existential anxieties and back again isn’t so typical of the books, the earnest verbal affirmations of the BSC members to one another are. There’s lots of agreeing with and echoing each other’s phrases, even as the characters happily contradict each other about the surprisingly metafictional question of whether they are living in an alternate world. (Does the term ‘alternate world’ even appear in the original corpus, I wondered? Reader, I checked: it does not. My guess is that here the model was drawing on the parts of its training data that most resembled the BSC novels – fanfiction, to which the notion of alternate worlds is central – and reusing the structures and phrases it found there.)
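The “Reader, I checked” step is easy to reproduce. A minimal sketch, assuming the corpus has already been loaded into a Python dictionary that maps (hypothetical) book IDs to their full text; the pattern is case-insensitive, matches whole words only, and lets the words of the phrase be split across a line break.

```python
import re

def count_phrase(texts, phrase):
    """Count case-insensitive, whole-word matches of `phrase` in each text.
    Words may be separated by any whitespace, including line breaks."""
    words = [re.escape(w) for w in phrase.split()]
    pattern = re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)
    return {name: len(pattern.findall(text)) for name, text in texts.items()}

# Hypothetical stand-in for the corpus: book IDs mapped to plain text.
books = {
    "book_a": "It was an alternate plan, Kristy said.",
    "book_b": "Claudia lived in an Alternate\nWorld of art supplies.",
}
print(count_phrase(books, "alternate world"))  # → {'book_a': 0, 'book_b': 1}
```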

As the training went on, so the conversations between characters began to get more abstruse:

“Well, I know that the way I feel about myself, the way I feel about myself. There’s something good about myself, you know. I’m different than everyone else. Like you.”

“Not that. Not anything like that. It’s just not the way I feel. I don’t care about how I feel about myself, or what I’m trying to do.”

“Or what’s in your heart?” I asked Claudia.

“The way you feel about myself is that I always feel better,” I reminded her.

“That way, I feel better. And I feel better when I look at you in a different way. You think of myself differently. And that’s why I know what I am.”

“You know what I’m trying to do? Maybe I am just trying to be bigger, too. But I don’t have that kind of world. It’s just you and I are people. Like you. When we didn’t have one person who was older than me. Or something. But it’s your own destiny. And what I’ve done. We see that I have a lot of self-control. I guess I think that’s why I am stronger than you. I feel better now.”

“How about you?” I asked.

“I like you a lot, and I don’t see it. But I know that in your life you’re better than me. And I really believe you can’t be anybody.”

“But you never feel worse. Maybe you’re better than you really are, and what else are you trying to do, too?”

“I don’t care.” I looked around. I wasn’t sure what I was trying to do, but I guess I could tell. If I had thought of it and tried to solve it, I could have made it easier.

This extract reads as an almost parodic depiction of self-obsessed teenagers trying to articulate who they are without having the vocabulary to do so. At the same time, though, it captures something of the intensity and almost romantic dimension of those all-important teenage friendships, where talking for hours to friends (old-school style on a landline telephone, if you were a teen in the 1980s or 90s) was a crucial part of working out who you were, what your place in the world was, and how you might navigate your relationships with others. The repeated circling-back to particular topics – as a neural net sometimes does – also made the extract reminiscent of those long and luxuriantly angsty conversations. I wondered to what extent the neural net’s generation of the pronouns “I” and “you” in proportions resembling those of the BSC corpus might be contributing to the success of its pastiche, and which other features of language the neural net might be attending to as its learning went on.
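One way to start testing the pronoun hunch is to compare how often “I” and “you” occur per thousand words in BSC text and in generated text. A rough sketch, assuming plain-text input and a deliberately naive tokenizer: contractions such as “I’m” are kept as single tokens and so are not counted as “I”, and the sample sentence is just a line of the generated output quoted earlier.

```python
import re
from collections import Counter

def pronoun_rates(text, pronouns=("i", "you")):
    """Occurrences of each pronoun per 1,000 word tokens."""
    # Normalize curly apostrophes so "don’t" and "don't" tokenize the same way.
    tokens = re.findall(r"[a-z']+", text.lower().replace("’", "'"))
    if not tokens:
        return {p: 0.0 for p in pronouns}
    counts = Counter(tokens)
    return {p: 1000 * counts[p] / len(tokens) for p in pronouns}

sample = "I don’t like his dad. I like him, too. Do you?"
print(pronoun_rates(sample))
```

Running the same function over a BSC book and over a chunk of generated output would give two comparable sets of rates, though a similar rate on its own wouldn’t explain why the pastiche works.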

After it got stuck in a loop where it began to generate streams of repeated terms, I gave the neural net a new prompt with the opening from our first DSC book, Quinn’s Great Idea, and was soon rewarded with another example of teen-Steinese (“I’m, of course, the only person I can describe, because I’ve come to such a great deal of difference in the way I am”). Obligingly, the neural net then got onto the topic of clothes and how they are used to telegraph identity in the BSC:

Claudia looks terrific, but is definitely not as quick and athletically as Claudia does. She dresses as a brat. She looks absolutely gorgeous, and looks glamorous. She wears perfect outfits, though.

Kristy is the one who’s more casual. For example, Kristy dresses all the way to Stoneybrook. I guess she looked a little sketchy, but Claudia is pretty casual. I think it’s been a good thing she’s more professional lately. I’ll admit, I’m almost almost like Kristy. But I am actually my best friend, and Claudia is sort of like Kristy. She goes for things that don’t fit the stereotype of young, shy, shy people. She’s a strict style person and I don’t see why I wouldn’t like to be an associate member. My roommate, Stacey, is an associate member of the BSC.

The BSC — I mean, although I’m a member of my club — was pretty much a complete mess. The conference was very organized, but the BSC was terrific. I decided to go out with Kristy.

It took about two days to complete the first meeting, so I told her about the problems. I was wearing my Mom’s jeans. But I think it was a bust out of something Claudia has.

What was striking about this was not so much the descriptions of clothing, but rather the way those descriptions were brought together with descriptions of who characters are and who they are trying to be. Though its referential cohesion leaves a fair bit to be desired, the extract also captures the way that the books introduce the characters in terms of their roles (members of the club, associate member) while simultaneously discussing their clothes and their appearance, most obviously in chapter 2 but also throughout the books.

As the training continued, the characterization felt like it was beginning to stabilize: the neural net was now able to keep track of three members of a conversation, while continuing to do a fair job of echoing the tone of the books:

“Okay, what happened?” I asked.

“No,” Kristy answered. “Let’s move on. You have to figure out a way to make up what you want to do.”

“Okay.” I was pretty sure Stacey might have asked. It’s not as if I’d like to leave Kristy alone, but I hoped she wouldn’t make a big deal out of it.

“Okay, it’s not exactly a great idea,” Kristy began.

“Well, I don’t know, but I’m not going to throw it out. I can find out how to make up my mind about who I want to be on the next page.”

“Yes,” Kristy said. “A lot of people are going to like to draw, but you need to figure out how to use your imagination.”

Kristy’s bossiness is an aspect of her character that the BSC books like to draw attention to (“kristy” is among the ten most frequent collocates of “bossy” and “bossiness” within a 5- to 10-word window either side). Here, though, the text doesn’t use the term but instead telegraphs it through the dialogue, while simultaneously conveying the uncertainty of the unnamed narrator. The extract also brings out the underlying tension between Kristy and the narrator, and – another feature of teenagehood – how one often feels as if the more articulate, more socially powerful people in one’s circle are getting everything their own way, while you stand on the sidelines self-censoring and suppressing your own feelings and desires.
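A collocate count like the one above can be approximated with a simple window count. This is a rough sketch under stated assumptions: naive lowercase tokenization, raw co-occurrence counts, and a hypothetical two-sentence sample. We don’t know exactly which tool or association measure produced the top-ten ranking; corpus tools typically rank collocates with a statistic such as log-likelihood or mutual information rather than raw counts, which is why a function word like “is” comes out on top here.

```python
import re
from collections import Counter

def collocates(text, node_words, window=5):
    """Count words occurring within `window` tokens either side of any node word."""
    tokens = re.findall(r"[a-z']+", text.lower().replace("’", "'"))
    nodes = {w.lower() for w in node_words}
    counts = Counter()
    for i, token in enumerate(tokens):
        if token in nodes:
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            # Don't count the node words as their own collocates.
            counts.update(t for t in left + right if t not in nodes)
    return counts

sample = "Kristy is so bossy. Mary Anne is shy, but Kristy is bossy again."
counts = collocates(sample, ["bossy", "bossiness"], window=3)
print(counts["is"], counts["kristy"])  # → 3 2
```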

Neural-net-generated Kristy, meanwhile, started getting seriously bossy:

Kristy nodded. “Look at the rules for the group, all the members. I found the idea of good ideas. And the reason I wrote these are just the ones I love.”

“Right,” I said. “I guess I’ll keep up with you.”

“Right,” said Kristy. “Okay, now let me have an idea for you, if you ask. First, to do homework on how you should be aware of each of the decisions you have made. Then, if you’re curious about how to find new ideas, I’ll pick up what I’d like to teach you.”

“Okay, I know,” I said.

“And if I have a little creativity, I’ll teach you the principle of taut and change.”

“Right. I mean, I try to do something different and know how you should feel about it, but not for anything I’m going to do it. Right. There’s nothing to be confused about.”

“I guess I can’t understand what I’m doing,” said Kristy. “I just think I’ll just come up with the best idea.”

The terms “Right” and “Okay” do some interesting work here. While they suggest acquiescence in Kristy’s unnamed interlocutor, coming from Kristy herself they work in combination with imperative and declarative sentences to convey cheerful imperiousness, signalling not so much Kristy’s agreement as her marshalling of those around her. Her veering from self-assurance (“I found the idea of good ideas”) to self-doubt (“I guess I can’t understand what I’m doing”) also felt very plausibly BSC. Her interlocutor, meanwhile, is trying to assert herself without much success (“I mean, I try to do something different and know how you should feel about it, but not for anything I’m going to do it”), and Kristy continues to steamroll her. The premise that a queen bee might make all of her friends “do homework on how you should be aware of each of the decisions you have made” (note: not homework on the decisions you have made, but on how you should be aware of those decisions) felt to me like something out of Judy Blume or Harriet the Spy: the discursive equivalent of a kid dressing up in her corporate mother’s 80s-era shoulder-padded jacket and heels and trying out phrases she’s heard the grown-ups use. The “principle of taut and change”, for instance, though it corresponds to nothing I know in the real world, sounds like an idea that might plausibly come out of the mouth of a motivational speaker: an alternate universe’s version of ‘lean in’ that it’s easy to imagine Kristy subscribing to. In these various ways, it felt as if the output had managed to capture something of Kristy’s character and was conveying it through textual features which went beyond the lexical.

Of course, cherry-picking excerpts from a longer piece of auto-generated output, as I’m indulging in here, and observing that some of these excerpts are a better match than others to the ways the BSC characters are portrayed is the kind of thing that gives work in the digital humanities a bad name. (Though I’m cheered by Katherine Elkins and Jon Chun’s remark that “reading sublime GPT text can transform one into the critic who, enamored of an author, can do nothing more than cite in full.”) I wasn’t setting out to make definitive statements about what this particular neural net was doing with this particular training set, though. Rather, I was trying to see what kind of mimetic functions GPT-2 was exercising when faced with the genre of young adult fiction focused on a group of entrepreneurial young women, and attempting to understand the way it was imitating the original genre through some of the categories of literary analysis. I was, for instance, surprised to find even semi-coherent characterization occurring, let alone with a degree of regularity.

As the training went on, the output began to investigate the line between friends and frenemies (with a little of its previous failure to differentiate between the participants in a dialogue):

“A lesson you need to learn is that you’ve already put out an ad campaign, and I want it to be a success,” Kristy reminded me. “And I know that. This is the first big campaign. It’s going to be like a baby-sitting project.”

“That’s what I said,” Kristy added. “And when you did that, you did it.”

“Great!” Kristy giggled. “But I guess I haven’t figured out how you could be successful. You are, since you and all your friends are probably just doing stupid stuff.”

I took a deep breath and put down my pockets and started my hand toward Kristy.

“We’re going to start,” she said.

“Okay, we’ll all move on!” I went on, breaking up.

Not for the first time, I was getting flashbacks to how subtly mean teenage girls can be to each other, which added a further degree of verisimilitude to the output (uncanny valley meets valley girls?). Then, as the discussion about the advertising campaign heated up, things began to get out of hand:

“Woooohhh!” I shrieked. “We need lots of pictures of the old addresses and who knows if this is a good idea. Maybe we should take those. I mean, if we were to do that, the flyers would be too busy and we could get you to do something like that.”

“Okay, but we’re not going to do anything like that,” I said, pointing to the flyer posters we’d put up. We kept saying “Duhh, Stacey,” and giggling for a while.

I didn’t know about the flyers, but I thought that maybe I could hold our nose and say “huh-huh-huh-huh-hauh-hauh-hauh-hauh-hauh” and become a freckle.

Right. The neural net had eaten one too many of Claudia’s beloved Ring-Dings, or else had done some transmedial genre-hopping and landed in a stoner movie. It was time to see what Quinn had turned up in her own adventures with GPT-2.

Six months later

Quinn

The DSC meeting where Anouk brought her GPT-2 findings, and I laughed until my stomach hurt, was one of my very last meetings in the before-times, just days before the elementary school and preschool shut down, and time began to go slowly, then quickly, then morphed into something altogether confusing and surprising. Especially here in the Bay Area, where we rely on festivals and events to mark the year in the absence of notable seasons. Or, at least, we used to. Maybe someday we will again.

It was fall, and universities in the US were making last-minute decisions either to go all-online with no students on campus (like my institution, Stanford, or Berkeley, the university in the city where I live, following a COVID-19 outbreak stemming from a frat party), or to bring students back, and then quickly rethink those plans as cases exploded. My oldest kid was doing first grade online for the indefinite future, and I didn’t expect the preschool to get through the year without a closure. The election was on the horizon, everyone was bored with social distancing and masks, and there was no end in sight to the ever-increasing COVID-19 death toll and the ongoing dismantling of civil society and the infrastructure that helps keep it afloat. Reading Anouk’s description of February in Edinburgh from the perspective of late August 2020 in the United States, I thought: what I’d have given for icy fingers and a picket line.

It had been a very long time since I laughed the way I did when Anouk first shared her GPT-2 results. I needed a good laugh, but I also wanted to try to do it myself. So I dropped Jeff Tharsen a note.

Jeff Tharsen is like a cross between a Viking and a Confucian scholar. He’s tall, with dark eyes and a long beard that once was blond and is now turning an off-white color like Mary Anne’s blouses. He’s the University of Chicago’s resident expert in humanities-based research computing but spends much of his time thinking about sound systems in premodern rhetoric and poetry, social justice and savage inequities, playing guitar like Rico from the California Diaries series, Minecraft mods, and finding infinite combos for his Magic: The Gathering deck builds. He’d blown me away recently with the “Shakespeare sonnets” and “Blake poems” he’d coaxed GPT-2 into generating, and while I’m getting better at sorting out code myself, I was happy to have some code and instructions to start from that I hoped might work.

Trying to plan ahead for releasing this DSC book as a Jupyter notebook, I put together a notebook that ran the external Python code from his repo. Next, I saw that his tutorial recommended running the code not just on a computational resource larger than your laptop but, specifically, on Graphics Processing Unit (GPU) nodes in his university’s high-performance computing cluster. I was impatient. I didn’t want to try to figure out how to access the GPUs available on Sherlock, Stanford’s high-performance computing cluster. My laptop, I figured, might be a little slower, but it’d work fine. I was so wrong.
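In hindsight, a quick check of what hardware the notebook can actually see is worth running before any long training job. A minimal sketch, assuming the training code uses either PyTorch or TensorFlow (we are not claiming this is what Jeff’s code uses); it only reports what the current Python environment can see and does not move any work onto a GPU. `torch.cuda.is_available()` and `tf.config.list_physical_devices("GPU")` are the standard checks in those two libraries.

```python
import importlib.util

def available_accelerator():
    """Report whether a GPU is visible to PyTorch or TensorFlow, if either is installed."""
    if importlib.util.find_spec("torch") is not None:
        import torch
        if torch.cuda.is_available():  # True only if a CUDA GPU is usable
            return "gpu (PyTorch)"
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf
        if tf.config.list_physical_devices("GPU"):  # empty list when no GPU is found
            return "gpu (TensorFlow)"
    return "cpu"

print(available_accelerator())
```

On a typical laptop this prints `cpu`, which is a useful warning that training will be slow.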

But let’s take a step back first.

What is machine learning anyway?

As Jeff reminded me when I kept venting my impatience at him because my model didn’t seem to be learning anything at all, machine learning isn’t a child you can coax into putting their shoes on faster because we really have to go or else we’ll miss our 5-minute social distancing drop-off window at the preschool and cause a backup for other families.

It’s math. It’s just math.

If you’re going to understand machine learning, it’s time to summon your inner Stacey the Math Whiz, join the Machine Learning Mathletes, and dive on in.

Machine learning involves algorithms that are trying to predict an output, given an input, a lot of examples of input/output pairs, and some parameters you can fiddle with to improve the algorithm’s guesses.

What if we tried to come up with an algorithm to predict Claudia’s grade on a test? We could start with something simple, like assuming that her grade on a given test is going to be the same as her current grade in that class. If it’s a test in art class, Claudia will get an A. If it’s in English class, she’ll get a C. One input (the class), one calculation based on that input (look up her current grade), one output (the grade). But everyone knows that grades don’t work like that at all. There are so many more factors to take into consideration. How hard have Claudia’s parents been on her about her grades lately? When did the test take place – was it before Mimi’s death, so Mimi could help Claudia study? How many baby-sitting jobs did Claudia have that week? Did she have art class the day before? Is there an art exhibition coming up? In a machine learning context, the factors these questions ask about are called “features”.
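The simple version of the algorithm, and the questions recast as features, might look like this in Python. Everything here is a hypothetical illustration: the grade table covers only the two classes mentioned above, and the feature names are ours.

```python
# Hypothetical lookup table: Claudia's current grade in each class (only the two mentioned above).
current_grades = {"art": "A", "english": "C"}

def predict_grade_baseline(test_class):
    """One input (the class), one calculation (look up her current grade), one output (the grade)."""
    return current_grades[test_class]

# The questions above, recast as features describing one particular test.
# Feature names and values are hypothetical; the baseline ignores everything except "class".
test_features = {
    "class": "english",
    "parents_cracking_down": True,
    "mimi_could_help_study": False,
    "babysitting_jobs_that_week": 3,
    "art_class_day_before": True,
    "art_exhibition_coming_up": False,
}

print(predict_grade_baseline(test_features["class"]))  # → C
```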

Knowing the answer to all those questions is one step towards improving our grade-prediction algorithm. But there’s still another set of information that we’re missing: how much does each of those things matter to the overall output? Maybe an upcoming art exhibition means that she’ll be a little more distracted than usual when trying to study, but the effect overall might be negligible. On the other hand, if Claudia’s parents are really cracking down on her, threatening to prevent her from participating in the Baby-Sitters Club until her grades improve, that’s likely to have a much bigger impact on the test outcome. The impact of a given feature is called its “weight” in machine learning.
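Adding weights turns the feature list into a (very small) linear model: start from a baseline score and let each feature nudge it up or down according to its weight. A minimal sketch with made-up numbers; in real machine learning the weights are learned from data rather than typed in, and the signs chosen here are our guesses.

```python
# Hypothetical weights: how many points each feature adds to (or subtracts from) the predicted score.
weights = {
    "parents_cracking_down": 12.0,       # large effect (the direction is our guess)
    "art_exhibition_coming_up": -2.0,    # small, nearly negligible effect
    "babysitting_jobs_that_week": -1.5,  # each extra job costs a little study time
}

def predict_score(baseline, features, weights):
    """Linear model: baseline score plus the sum of each feature value times its weight."""
    return baseline + sum(weights[name] * float(value) for name, value in features.items())

# True/False features count as 1/0; counts are used as-is.
features = {"parents_cracking_down": True, "art_exhibition_coming_up": True, "babysitting_jobs_that_week": 2}
print(predict_score(75.0, features, weights))  # → 82.0
```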

“Deep learning” refers to a kind of machine learning where features are organized into layers. Lower layers capture basic features, and higher layers capture more subtle things. This is a little easier to understand with an example from deep learning for computer vision: a low-level feature might enable an algorithm to recognize that something in an image is a line. A higher-level feature might be able to recognize that something is a giant dangly earring like Claudia might wear. In the image below, the layer on the left identifies recurrent patterns in the way black, white and grey pixels come together to form particular shapes like lines and curves. The layer in the middle spots patterns in the way those shapes typically fit together (what a human being can recognize as facial features like eyes and noses). Then the layer on the right finds patterns in the way those eyes and noses are put together with other things, like mouths, foreheads and ears, to make faces.
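The idea of layers can be sketched without any imaging library: each layer takes the previous layer’s outputs as its inputs. This is a toy sketch with hypothetical, hand-picked weights on a four-pixel “image”; real vision models have millions of learned weights and typically use convolutional layers rather than the fully connected ones shown here.

```python
def relu(x):
    """Pass positive signals through; silence negative ones."""
    return max(0.0, x)

def layer(inputs, weights, biases):
    """One fully connected layer: each unit takes a weighted sum of all inputs, adds a bias, applies ReLU."""
    return [relu(sum(w * x for w, x in zip(row, inputs)) + b) for row, b in zip(weights, biases)]

pixels = [0.0, 1.0, 1.0, 0.0]  # a tiny 4-pixel "image": dark, bright, bright, dark

# Lower layer: three simple detectors (hypothetical weights).
low = layer(pixels,
            [[1.0, -1.0, 0.0, 0.0],   # responds when pixel 0 is brighter than pixel 1
             [0.0, 1.0, 1.0, 0.0],    # responds to brightness in the two middle pixels
             [0.0, 0.0, -1.0, 1.0]],  # responds when pixel 3 is brighter than pixel 2
            [0.0, 0.0, 0.0])

# Higher layer: one unit that combines the lower detectors' outputs.
high = layer(low, [[0.5, 1.0, 0.5]], [-1.0])

print(low, high)  # → [0.0, 2.0, 0.0] [1.0]
```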

Training a machine learning algorithm is like making the algorithm play a big guessing game. Your training data has all the “right” answers. The algorithm takes part of the training data, hides some of it from itself, and attempts to guess the right answer by changing the weights it assigns its features. Then it checks its work, and typically will report back to you about how it’s doing, using something called “loss”. High loss values (and what counts as “high” depends a lot on the context) mean that the algorithm made some bad decisions during that round, leading to a bunch of mistakes. Remember, it’s a guessing game: sometimes an algorithm can get off on a wrong track for a turn or two, but it should be able to correct itself and keep improving by passing information about its mistakes back through the network (a process called backpropagation), updating weights along the way.
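Here is the guessing game in miniature: a one-feature linear model whose two parameters are adjusted by plain gradient descent to reduce mean squared error. This is a stand-in chosen for readability; GPT-2 has vastly more parameters and is trained to predict the next token using a cross-entropy loss, but the loop of guessing, measuring the loss, and nudging the weights is the same idea. The data (hours of studying versus test score) is hypothetical.

```python
# Hypothetical training pairs: (hours Claudia studied, test score).
data = [(0.0, 61.0), (1.0, 67.0), (2.0, 77.0), (3.0, 83.0)]

def mean_squared_error(w, b):
    """Loss: the average squared gap between the model's guesses and the right answers."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(steps=2000, learning_rate=0.05):
    """Repeatedly guess, measure the loss, and nudge w and b to reduce it."""
    w, b = 0.0, 0.0  # start with an uninformed model
    history = []
    for _ in range(steps):
        # Gradients of the loss with respect to w and b: which direction lowers the loss.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mean_squared_error(w, b))
    return w, b, history

w, b, history = train()
print(f"w={w:.2f}, b={b:.2f}, first loss={history[0]:.1f}, last loss={history[-1]:.2f}")
```

Because `history` records the loss after every step, plotting it is the usual way to see whether training is actually making progress or just bouncing around. The loss here settles above zero because no straight line passes exactly through all four points.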

If your loss value just keeps bouncing around the same numbers you started with for turn after turn, something has gone wrong. (And something had gone wrong when I tried it with Jeff’s code – more on that later.) But it’s also a problem if your loss value goes all the way to 0. You might think that a 0 loss value is great – perfect A+ right answer every time! But remember: you’re not trying to train a model that will spit your training data back at you with perfect accuracy. What you want is a model that can handle, with reasonable accuracy, data that it hasn’t seen before. When a model gets too good at predicting your training data, so good that it hurts its ability to handle new data, that’s called “overfitting”.
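A deliberately extreme caricature of overfitting: a “model” that memorizes its training answers gets a perfect 0 loss on them but does badly on examples it hasn’t seen, while a plain straight-line fit has some training loss but handles new examples well. The numbers are hypothetical and chosen to make the contrast easy to see.

```python
# Hypothetical (hours studied, score) pairs.
train_data = [(0.0, 61.0), (1.0, 67.0), (2.0, 77.0), (3.0, 83.0)]
held_out = [(0.5, 64.0), (2.5, 80.0)]  # tests the models never saw during training

def mse(predict, data):
    """Mean squared error of a prediction function on (x, y) pairs."""
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)

# Model 1: memorize the training answers exactly; guess the average score for anything new.
memory = dict(train_data)
average = sum(y for _, y in train_data) / len(train_data)

def memorizer(x):
    return memory.get(x, average)

# Model 2: a straight line fitted by ordinary least squares.
n = len(train_data)
mean_x = sum(x for x, _ in train_data) / n
mean_y = sum(y for _, y in train_data) / n
slope = (sum((x - mean_x) * (y - mean_y) for x, y in train_data)
         / sum((x - mean_x) ** 2 for x, _ in train_data))
intercept = mean_y - slope * mean_x

def line(x):
    return slope * x + intercept

for name, model in [("memorizer", memorizer), ("line", line)]:
    print(name, "train:", round(mse(model, train_data), 2), "held-out:", round(mse(model, held_out), 2))
```

The memorizer’s perfect training score is exactly the warning sign described above; the line’s small but nonzero training loss comes with a much better held-out score.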

But back to Claudia’s grades! And here’s where things get a little spooky. The way I’ve described things so far suggests that you, the knowledgeable human who understands how the world works in general, and how Claudia’s life works in particular, define the features that the algorithm uses. Maybe you can trust the algorithm to handle the weights, but you can’t trust the ghost in your laptop to make the call on what features to use, can you?

Actually… yes? It turns out that in most cases, you’re better off leaving the whole thing to the algorithm. You can see how this can feel irksome, particularly if you’re like Kristy and want to be in control. How can you trust the ghost in the laptop to pick the right things if it’s not an expert? The fact that you’re not in there bossing the algorithm around contributes to the rhetoric around machine learning as a “black box”. But here’s the thing: hopefully you’ve already wrapped your head around the idea that computers just do things differently than humans. (If not, check out DSC #8: Text-Comparison-Algorithm-Crazy Quinn.) With text comparison algorithms, it’s harder to adjudicate how “right” the computer’s determinations are compared to the decisions we’d make as humans. We just have to grapple with them as another perspective, take what insight we can, and sometimes agree to disagree. But machine learning is a little different because there often is, actually, a “right” answer. Without getting too worked up about how the algorithm comes up with its answer, we can evaluate how often it gets the answer right.
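Checking “how often it gets the answer right” can be as simple as comparing the algorithm’s guesses against the right answers for data it hasn’t seen, and counting the matches (a measure called “accuracy”). A minimal sketch, with hypothetical grades standing in for real test data:

```python
def accuracy(predictions, right_answers):
    """Fraction of predictions that match the right answers."""
    if len(predictions) != len(right_answers):
        raise ValueError("need one prediction per right answer")
    if not right_answers:
        raise ValueError("nothing to evaluate")
    correct = sum(p == a for p, a in zip(predictions, right_answers))
    return correct / len(right_answers)

# Hypothetical predicted vs. actual grades on five of Claudia's assignments
predicted = ["C", "B", "D", "C", "A"]
actual = ["C", "B", "C", "C", "A"]
print(accuracy(predicted, actual))  # → 0.8 (4 of 5 right)
```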

Which is not to say that we should pay no mind to how the ghost in the laptop makes decisions. Machine learning algorithms (often under the fancier title of “artificial intelligence” or AI) are being adopted as automated decision-making tools in all kinds of contexts, including ones with serious consequences, like courts attempting to determine which defendants are “high risk” for committing future crimes. Algorithms like to find shortcuts to get to the answer, or close enough. Even if you’ve tried to set things up so that you don’t tell the algorithm about race (in order to ensure that it’s not making determinations based on race), you may discover that one of the features it picks up and uses is essentially a proxy for race. It’s not for nothing that OpenAI, as Anouk mentioned, initially chose not to release their full GPT-2 model, to keep people from using it for nefarious purposes. (That said, OpenAI reached a deal with Microsoft giving them an exclusive license to the full GPT-3 model, allowing them to embed, adapt, and use the model however they please. And depending on how they vet their clients… 😬)

There are no lives at stake when we’re using machine learning with literature. But you may still want to understand how the algorithm is making its determinations, especially when it’s performing well. Is there anything we can learn about literature from the way an algorithm is able to classify it, or generate text that readers perceive as “similar” (like Anouk was trying to do in February)? Some algorithms (like k-means clustering) can easily give you the features that they’re using; with others, particularly deep learning algorithms with a lot of layers, it’s harder to determine. The features the algorithm is using may not be all that interesting, or might reflect things about your corpus that aren’t actually what you’re trying to get at (e.g. if you’re looking for what characteristics differentiate books written by British vs. American authors, the presence of the word “whilst” is probably not what you want).

Fine-tuning GPT-2#

Jeff’s notebooks for fine-tuning GPT-2 were supposed to be run on a GPU (a Graphics Processing Unit – I’ll say more about those in a minute). But ever since I was a child reading the Baby-Sitters Club novels, I’ve tended to skip inconvenient instructions, cross my fingers, and hope for the best. Which is how I ended up running his notebook on my MacBook Pro (which does not have a GPU) for 24+ hours, without the model getting more BSC-like at all. It wasn’t just a problem of using the wrong hardware for the job: I had also made a mistake in how I set up the fine-tuning code, which turned into an opportunity to learn about “learning rates.”

Learning rates#

Part of why the model wasn’t learning anything was that I’d set the learning_rate hyperparameter too high. (In machine learning, a “hyperparameter” is just a setting that you, the squishy human, adjust; “parameters” are estimated from data automatically by the machine. You adjust hyperparameters by specifying their value when you run a task like fine-tuning a model.)

Sorting out learning_rate is kind of like the process of learning to drive a car – or the difference between a driving video game (like my kids’ favorite Mario Kart 8) and driving an actual car. When you’re feeding in a large-ish data set for fine-tuning GPT-2, a “learning_rate” that is too high leads to a lot of overcompensating when fiddling with the different metaphorical steering wheels of machine learning to try to get the right answer. Not getting the right answer? EEEEEEEEK! Turn it all the way to the right! Oh, wait, that didn’t get you the right answer? OMG NO! Turn it all the way back to the left! This wild vacillating left and right might barely keep you on Rainbow Road in Mario Kart 8 (with machine learning, this looks like reliably getting the right answer, referred to as “convergence”), but it takes a lot longer and is more stressful than if you’re driving the car of machine learning with some seasoned expertise. In a lot of cases, a small change in the orientation of the steering wheel can be all you need to stay on the road. But you don’t want to be too hesitant, either! Because that also means it’ll take longer to achieve convergence. And especially if you’re paying for your GPUs (or waiting in line for relatively scarce GPU resources in an institutional High-Performance Computing cluster), you don’t want that.

So I’d spent 24 hours careening back and forth between “NOT ENOUGH!!” and “TOO MUCH!!”, but the learning_rate hyperparameter isn’t something that can adjust itself on the fly based on the context, the way you’d actually drive a car. You have to specify it upfront. And I should have chosen a smaller one. (I didn’t feel too bad, though. Adjusting learning rates is a huge and unsolved problem in machine learning and usually people just guess.)
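Here’s a toy version of the steering problem, as a minimal sketch: one weight instead of GPT-2’s millions, and a made-up loss, (w − 3)², whose lowest point is at w = 3. The weight `w` is a parameter that the machine adjusts; `learning_rate` is the hyperparameter we pick. Each step moves `w` downhill by the learning rate times the slope. The specific learning rates below are chosen to show each behavior and have nothing to do with what GPT-2 needs:

```python
def gradient_descent(learning_rate, steps=20, start=0.0, target=3.0):
    """Minimize the toy loss (w - target)**2 with plain gradient descent,
    returning the value of the weight w after each step."""
    w = start  # the parameter: the machine adjusts this
    history = []
    for _ in range(steps):
        slope = 2 * (w - target)       # derivative of (w - target)**2
        w = w - learning_rate * slope  # step downhill, scaled by the hyperparameter
        history.append(w)
    return history

# learning_rate is the hyperparameter: the squishy human picks it upfront
for lr in [0.01, 0.3, 0.95, 1.05]:
    path = gradient_descent(lr)
    print(f"learning_rate={lr}: first steps {[round(w, 2) for w in path[:3]]}, "
          f"after 20 steps w = {path[-1]:.3f} (target 3.0)")
```

With 0.01, `w` creeps toward 3 and is still well short after 20 steps. With 0.3 it gets there quickly. With 0.95 it zig-zags back and forth across 3, slowly settling (the EEEEEEEEK! steering). With 1.05, every correction overshoots a bit further than the last, and it never gets there at all.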

The bigger problem was that, no seriously, you really do need GPUs to fine-tune GPT-2 at all effectively.

What’s a GPU and why should you care?#

GPU stands for Graphics Processing Unit. You can think of it as a cousin of the CPU (or Central Processing Unit), which you might be familiar with from your own computer, if you’ve ever looked at the Windows Task Manager or Mac Activity Monitor to figure out what runaway process is sucking down your battery and making your fan whir with its high CPU usage. (Looking at you, Adobe Creative Cloud!) Gaming laptops need good graphics performance in order to constantly render, update, and rotate the millions of polygons that make up modern game graphics, so they’re more likely to have GPUs to make that work. If you’re trying to render 3D models at tolerable speeds, you should also work on a machine with GPUs. But why do GPUs matter for GPT-2? We’re not using machine learning to try to cluster BSC book covers or anything! (Though actually… well, don’t be surprised if you see that in a future DSC book.)

So many things in the world of machine learning assume you share a particular set of cultural references used to explain things. CPUs vs. GPUs commonly get explained through some variant of “Ferrari vs. cargo truck”. Neither of those vehicle specifications means a whole lot to me. Let’s try it another way.

Stacey and Kristy are both contestants in a department store contest where they have ten minutes to bring all the clothing items they can up to the cash register, and they can keep everything as long as they correctly sum up the cost.

Stacey is like a CPU. She’s really good at math, but is also very concerned about always looking cool – what if she bumped into a cute guy while her arms were piled high with clothes? That wouldn’t do. So she’ll speed-walk around the store, picking out a diverse set of expensive and stylish outfits, never holding more than a couple items at a time, and calculating the cost in her head.

Kristy, our GPU, is only average at math. She doesn’t care what impression she makes: she’s borrowing her brother Sam’s giant hiking backpack and is going to stuff it to the top. Kristy isn’t into fashion, so instead of putting a lot of thought into how different items of clothing might assemble into trendy outfits, she’s just going to fill the hiking backpack with multiples of the same jeans, t-shirts, turtlenecks, sweatshirts, underwear, and socks. She’s also bribing some of the elementary school kids on her Kristy’s Krushers baseball team to help her with this mall contest. Her plan is to stuff the backpack, bring it over to her team waiting by the cash register, and each kid will work on adding up the cost of a few items.

How does this work out? Stacey the CPU ends up darting back and forth around the department store, doing multiple different kinds of complex calculations (all the math to add up the price of all her items, plus the evaluation of how fashionable and combinable her choices are). This is why CPUs are probably what you use most of the time: you want something fast, nimble, and able to handle lots of different things.

For a while, it looks like Kristy the GPU has fallen far behind. Stacey the CPU already has lots of articles of clothing piled up at the cash register while Kristy is still filling the camping backpack. But in the three minutes remaining by the time Kristy makes it to the cash register with her backpack, she is able to divvy up the calculations among her team and still finish in time with the correct answer. Her team isn’t as mathematically capable as Stacey – they are, after all, just elementary school kids – but by giving them each a simple task to work on (just adding up the cost for a few items), Kristy parallelizes that part of the process and it finishes quickly.

For this small task – gathering clothes for 10 minutes and adding up the total cost – maybe Stacey the CPU and Kristy the GPU finish at the same time, especially since Stacey the CPU was using some more complex calculations that might slow her down. Kristy the GPU spent most of the time loading up the camping backpack, and the actual calculation was fairly brief. But if the task were a lot bigger – if there were many more small calculations that could be parallelized across all 9+ Kristy’s Krushers team members – Kristy the GPU could easily leave Stacey the CPU in the dust.
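The Krushers strategy (split a big pile of simple calculations into chunks, hand each chunk to a different worker, then combine the subtotals) can be sketched in a few lines of Python. This only illustrates the divide-and-combine pattern, not how a GPU actually works: the “team” here is a pool of threads from Python’s standard `concurrent.futures` module, and for plain arithmetic like this, CPython’s global interpreter lock means the threads won’t actually make anything faster. Real GPU parallelism for machine learning happens inside libraries like TensorFlow or PyTorch. The prices are hypothetical, in cents to avoid floating-point rounding:

```python
from concurrent.futures import ThreadPoolExecutor

def add_up(prices):
    """One Krusher's job: add up the prices of a few items."""
    return sum(prices)

def stacey_total(prices):
    """Stacey the CPU: one capable worker adds every price, one at a time."""
    total = 0
    for price in prices:
        total += price
    return total

def kristy_total(prices, team_size=9):
    """Kristy the GPU: split the pile into chunks, give one chunk to each
    team member, then add up the team's subtotals."""
    if not prices:
        return 0
    chunk_size = -(-len(prices) // team_size)  # ceiling division
    chunks = [prices[i:i + chunk_size] for i in range(0, len(prices), chunk_size)]
    with ThreadPoolExecutor(max_workers=team_size) as team:
        subtotals = list(team.map(add_up, chunks))
    return sum(subtotals)

# Hypothetical prices, in cents
prices = [1999, 2499, 1250, 899, 3499, 1999, 650, 650, 2250, 1500, 799, 4999]
print(stacey_total(prices), kristy_total(prices))  # → 22993 22993
```

Both approaches arrive at the same total; the difference is only in how the work gets divided up.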

And that’s what happened when I tried to fine-tune GPT-2 on the Baby-Sitters Club corpus using my laptop. Poor Stacey the CPU was running all over, trying to do the calculation to update the weights for all the features of GPT-2… while also running like 50 browser tabs… and probably more than a couple Adobe apps… plus my email, and probably like 12 other things. She wasn’t getting anywhere fast with this fine-tuning thing.

There’s more to text generation than GPT-2#

Around the time I was struggling with ways to fine-tune GPT-2 using the Baby-Sitters Club corpus, fall quarter had begun at Stanford, and I started teaching my biennial non-English DH course. (Online, asynchronously, and hastily reworked week-by-week, if I’m honest about it.) I was amazed at how many students I had: 16 registered, and another five or so auditors. (Side note: if you’re teaching DH and have students who don’t normally work with English, check out the Multilingual DH site or get in touch! I’d love to help you give them what they need to be able to apply what you’re teaching to their language.)

When I learned more about one of my students from the Classics department, I was thrown into self-doubt. Annie Lamar has warm gray eyes and an affinity for purple clothing. She has long hair that is almost always twisted and copiously pinned on the top of her head. She studies ancient languages and artificial intelligence, which, although it sounds quite serious, often results in the creation of chat bots so she can talk to her favorite ancient authors. Annie had a BA in Classics and a BS in Comp Sci from the University of Puget Sound (not far from where I grew up in the Seattle suburbs). Even before starting grad school in Classics at Stanford, she had done work on creating network graphs of texts, machine translation for a low-resource language while focusing on style, and generating metrically accurate Homeric poetry. Was there anything I could actually offer her with this class, as someone who was struggling to just get GPT-2 fine-tuned, myself?

By the end of the quarter, it turned out okay – I learned that computer science departments have their own constraints about what it’s acceptable to work on, and DH-as-such acculturation has some value. Annie’s final check-in assignment, in response to the prompt “What is digital humanities?”, was perfect: “In the words of Democritus, ‘Αρχάς είναι των όλων ατόμους και κενόν, τα δ’ άλλα πάντα νενομίσθαι.’ The beginning of everything is atoms and emptiness, the rest is all perception.” But towards the beginning of the quarter, when I wondered if I could teach anything close to what I might learn from Annie, I asked if she’d be up for talking with me and Anouk about machine learning and text generation.

Anouk#

From our Zoom boxes, Quinn and I talked Annie through where we’d gotten up to so far with our neural net attempts.

“… so, we’re trying to understand what goes on under the hood when one takes a neural net like GPT-2 and retrains it on BSC text,” finished Quinn.

“Yeah, we’d really like to crack open the black box to understand how it’s working inside,” I added. “You know how it’s possible to find the individual node in a neural net that keeps track of the pointy ears in pictures of cats?” I asked.

Annie nodded.

“Well, could we do something like that? Find the nodes that have a kind of similarly identifiable function for texts. Only instead of telling us something that isn’t interesting such as the fact that cats have pointy ears, it might be able to tell us something we don’t know about texts, like, um –”. I was incoherent, weary after a week of dealing with failing institutional virtual learning environments and anxious students, and there was a lag in my connection with the others 5000 miles away. I was clearly not making much sense.

“– like, what are the pointy ears of literary texts?” Quinn helpfully jumped in.

“Hmm,” Annie considered this. “You know in the movie Arrival there’s an alien language? That alien language definitely means something to the aliens: it’s just that no one else can know what it means. It’s kind of the same with what’s inside the black box of a neural net: it’s just a bunch of ones and zeros in a multidimensional matrix that we have no chance of understanding. So what we have to do is to develop ways to evaluate it, for instance by using vocabulary graphing, which lets us see how well the vocabulary of a generated text matches up with the training data.”

“Evaluation!” I thought. Maybe that would be where some background in literary studies might come in handy …

Quinn, meanwhile, was trying out another conceptual metaphor. “What does it mean to take the strange sausage of GPT-2 and make it BSC-flavored?” she asked.

“Ew!” replied Annie. “I read the BSC books over and over when I was a kid but I don’t ever remember coming across Claudia and the Strange Sausage.” We giggled. “Anyway, here’s one way to think of it. You take a deep recurrent neural network and you start training it. You train and you train, and it’s all going well, and then you get to the point where you’ve overfitted and that means you’ve gone too far, as the model starts spitting out actual lines from real BSC books. So, you need to ‘freeze’ the training before that point to get it to the right level.”

“That’s really helpful,” Quinn mused, and I nodded. Neither of us had thought of neural net training like that before.

“So, what would be the difference between retraining something like GPT-2 on the BSC corpus, and training a neural net on the BSC corpus alone?” Quinn wanted to know.

“Yes!” I interrupted. “I thought neural nets needed seriously large corpora, and ours is pretty small as ML-training datasets go. Can you even use a neural net on something that size?”

“Funny you should ask,” smiled Annie. “Small data is my thing. I work on Ancient Greek corpora that are typically about 2% of the optimal size for training neural nets. In general, a ‘good’ neural network requires about 1,000,000 lines of training data. My datasets are usually composed of 20,000 lines or fewer. So I’m interested in seeing how well neural nets do with data of that size, and I’ve used OpenNMT-py to train models of that sort.”

I thought of the wealth of knowledge about the BSC among the members of the DSC. “Would it be possible to evaluate the outputs of different models – some built on GPT-2 and retrained on the BSC corpus, and some trained on the BSC corpus alone – using the expertise of the members of the DSC?” Evaluation is important in CS but I wasn’t sure if the judgments of people with literary training … well, counted as proper evaluation.

“Completely!” was Annie’s reassuring reply. It turns out that she has actually published on this very question. “We give the model dialogue and ask it to fill in a section that we have removed – the beginning, the middle or the end, say. Then we ask humans who are familiar with those texts to evaluate if they think there is any fake text, and if so, where it is.”

Quinn and I exchanged glances, or we would have had we not been on Zoom where looking someone in the eye involves looking away from their face to your webcam. This would be a way to involve the rest of the Data-Sitters, perhaps in a future book: use different models to generate a number of plausible chunks of BSC-esque prose and ask our fellow club members to evaluate which sounded the most real. Perhaps my dream about literary domain expertise actually coming in handy wasn’t such a ridiculous idea after all.

Six months after that#

Quinn#

It’s been a year since we started writing this book. Or maybe on some level, we’re still frozen in March. Maybe today is just March 337th in Covid Standard Time. I need a break. There is no way to take a break, at least, not while conscious. We’re reaching the point where language for talking about this is breaking down. “Burned-out” was a while ago. The metaphor crumbles in the pandemic: when a candle burns out, it stops burning. But the sun keeps rising, the days keep happening, and we continue staggering along.

In short, laughter is harder to come by, and we need it more than ever. (I mean, actually what we need is a break – I’ve had vivid fantasies of checking into a cheap motel and just spending 24 hours without anyone asking me to make them a sandwich. But that’s not happening. So I’ll take laughter.)

For the longest time, the Data-Sitters Club has been my escape from everything, but the weight of that everything, compounded by the everything coming from the rest of the world (Election! Election results! Holidays-Such-As-They-Were! 16-Day weekend for the kids! Attempted coup! State-wide stay-at-home order with minimal local ICU capacity! Another impeachment! Inauguration! California stay-at-home order lifted even though everything is still awful and there’s nowhere near enough vaccines in the state for health workers and 75+! Giant storms and power outages! GameStop stock breaks the stock market! Waiting to find out what weird and probably awful thing will happen next!) turned this book into something akin to the apple cores my oldest kid has a penchant for stashing underneath the living room bean bags. I know it’s there, but I’m afraid of what I’ll find, so I don’t look – and weeks pass, turning procrastination into dread.

But during another nondifferentiable weekend at home, I finally sat down and reread this book. And I was surprised at how much I enjoyed it. An idea began to form in my head. Maybe I could at least give this book an ending, even as it’s gotten hard to imagine we’ll actually manage to escape this state of suspended animation for our own lives.

The return of text-comparison-algorithm-crazy Quinn#

I loved Annie’s idea of generating some Dawn-approved, organic Baby-Sitters Club plant-based meat substitute, made exclusively of text from the books, without the potentially unsavory admixture of GPT-2’s unspecified source websites based on Reddit links. And I was really curious if it could trip up our expert evaluators (AKA my fellow Data-Sitters with encyclopedic knowledge of the series). But this book was already long enough, so it’d be another task for another book.

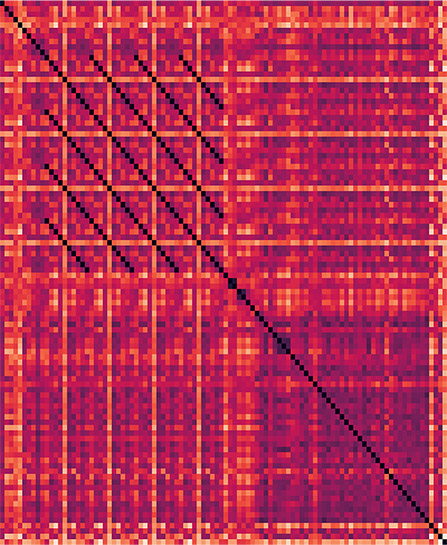

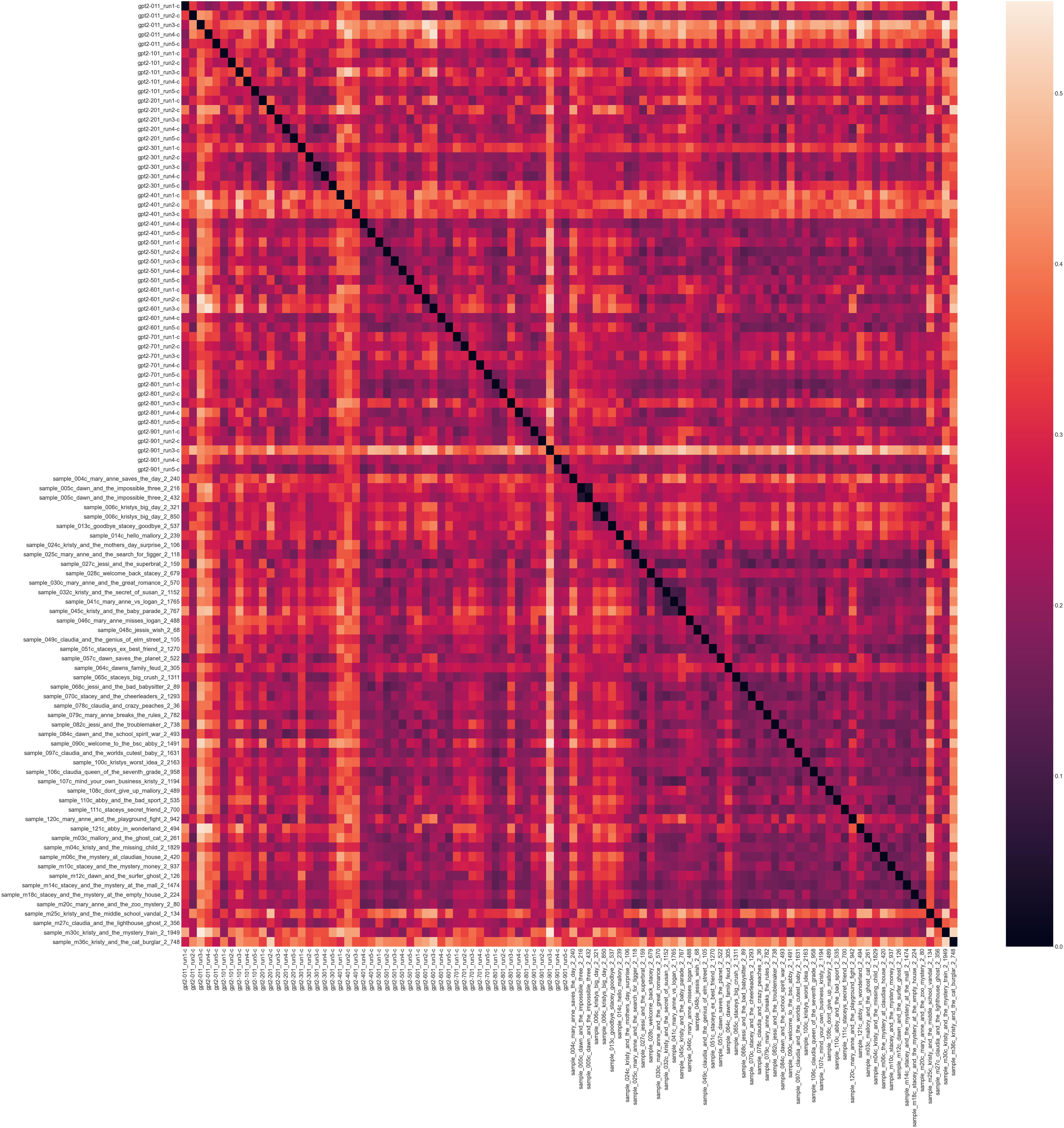

What I found myself wondering was whether the computer would be able to tell that something was amiss with the text that GPT-2 generated. And furthermore, whether the computer would judge the samples from early on in the training process to be less similar to real Baby-Sitters Club text than the later ones.

I’d eventually found a notebook that allowed me to fine-tune GPT-2 using Google’s GPUs for free. Google Colab has some limitations (like timing out after a few hours), but if you’ve used Jupyter notebooks before, it’s a familiar environment, has easy integration with Google Drive, and has a free supply of shopping-contest Kristys with enormous backpacks (GPUs) who can get your job done in no time.

The notebook I modified for the occasion was created by Max Woolf. This notebook makes it easy to load in a text file from Google Drive with the training data, fine-tune GPT-2, and save the resulting model as well as samples from various stages in the training process.

The configuration I used saved sample output from GPT-2 every 10 rounds as it was being retrained using the Baby-Sitters Club corpus, and the model trained for 1000 rounds. Because things are a little different every time you train a model like this, I ran it five times, so we’d have 5 sets of samples from different training attempts. 495 samples is a lot of text to wrangle, so I pared it down to 50: one every 100 rounds.

I trained the model using just text from chapter 2’s, rather than whole books. The chapter 2’s have a core of predictability that I thought might be easy to get the machine to pick up on, but in addition to the reliable “describe all the characters and the club” prose, there’s usually some plot-based stuff to give the computer something more than tropes. (Want to hear more about chapter 2’s and some other things we’ve done with them? Check out DSC #8: Text-Comparison-Algorithm-Crazy Quinn.)

One of the frustrating things is that you can’t get GPT-2 to generate a chapter 2’s length (any chapter 2’s length, not even the short ones) of continuous text. 1,024 tokens (mostly words or pieces of words, but punctuation counts too) is a hard limit. This has always struck me as an arbitrary annoyance, and I was grateful when Annie dug into it and explained it to me:

Transformer models are great because they are efficient and the training time is much less than that of a typical RNN. However, transformer models sacrifice information about sentence-level word-positioning in order to run every word in a sentence through the network in parallel. This means that in a naked transformer, there is no information about syntax. One way to solve that problem is to associate every word in the sentence with a number indicating its position; this number essentially becomes another feature associated with the word in the word embedding, hence ‘fixed positional embeddings.’

This also means that the longer the text you ask the model to generate, the less confident the model will be: if the positional embeddings only ever reached, say, 1,000, then when you ask the model to generate a word at position 5,000, it has very little information to go on. Transformer models in general do much better when asked to generate shorter pieces of human-like text (e.g. chatbots). Here, it works extremely well for generating human/BSC-like conversation, but you are limited in length.
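Annie’s point about positions can be made a little more concrete. In GPT-2 specifically, each position from 0 to 1,023 gets its own vector of numbers (learned during training), and that vector is added to the word’s own vector before the model does anything else with it. The minimal sketch below is a toy stand-in, assuming random numbers instead of trained values and 4 dimensions instead of the 768 that the smallest GPT-2 model uses. It shows why position 5,000 is a problem: there’s simply no row in the table to look up.

```python
import random

N_CTX = 1024  # number of positions GPT-2 has embeddings for
N_EMBD = 4    # toy size; the smallest GPT-2 model uses 768

# Toy stand-in for GPT-2's position-embedding table: one row per position.
# (In the real model these numbers are learned during training.)
rng = random.Random(0)
position_table = [[rng.gauss(0, 0.02) for _ in range(N_EMBD)] for _ in range(N_CTX)]

def add_position(word_vector, position):
    """Add the vector for `position` to a word's vector, the way GPT-2 does
    before its transformer layers. Fails for positions with no row."""
    if not 0 <= position < N_CTX:
        raise IndexError(f"no embedding for position {position}: "
                         f"the table only covers 0 to {N_CTX - 1}")
    return [w + p for w, p in zip(word_vector, position_table[position])]

word = [0.1, -0.3, 0.7, 0.0]  # hypothetical embedding for one token
print(len(add_position(word, 1023)))  # last position with a row: works
try:
    add_position(word, 5000)
except IndexError as err:
    print(err)
```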

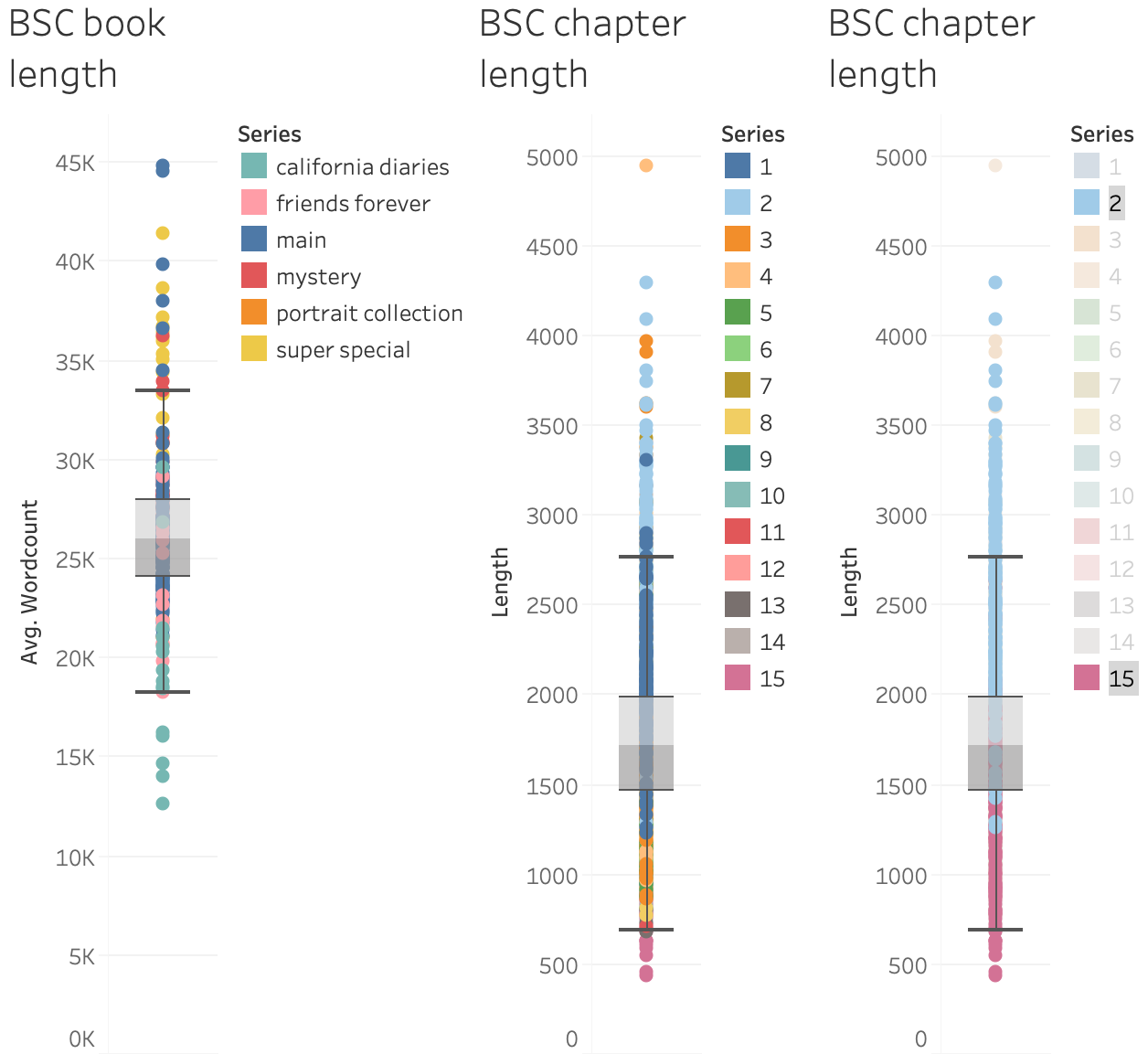

So there you have it. 1,024 tokens might be enough to get you a chapter 15’s worth of text, but chapter 2’s tend to be on the long side:

Using word frequencies rather than word counts is important in order to accommodate differences in length, but I didn’t think that was enough. Some of these chapter 2’s are significantly longer than 1,024 tokens (the word count viz above counts words, not tokens, so all the punctuation would increase the GPT-2 relevant length even more) – and more total words means that even subjectively prominent words in the chapter will have a lower frequency. What I wanted was 50 random GPT-2 sized chunks of real chapter 2’s.
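Here’s a minimal sketch of the difference between counts and frequencies, using two made-up snippets rather than real chapter 2’s. “Kristy” appears twice in each, but the second snippet is twice as long, so her share of the text is cut in half:

```python
from collections import Counter

def relative_frequencies(tokens):
    """Turn raw counts into each token's share of the whole text."""
    total = len(tokens)
    return {token: count / total for token, count in Counter(tokens).items()}

# Two made-up snippets, already split into tokens (punctuation counts too)
short_text = "Kristy called the meeting to order . Kristy was president .".split()
long_text = short_text + "Claudia passed around Ring Dings and Mary Anne took notes .".split()

print(len(short_text), len(long_text))                       # → 11 22
print(round(relative_frequencies(short_text)["Kristy"], 3))  # → 0.182
print(round(relative_frequencies(long_text)["Kristy"], 3))   # → 0.091
```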

My first thought was to tokenize the text file (separate out words and punctuation) with all the chapter 2’s that I’d used to fine-tune GPT-2, and then grab some chunks of the right length. But then I realized that approach would probably leave me with some weird chimeras featuring the end of one chapter 2, and the beginning of the next one: there was no way to tell where one stopped and the next began.
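The way around the chimera problem is to pick each chunk’s starting point inside a single chapter’s own list of tokens. Here’s a minimal sketch of that idea (the function name, and the choice to hand back short chapters whole, are illustrative assumptions, not necessarily what the code behind this book did). The last safe starting point is the chapter’s length minus 1,024, which is why it helps to know each chapter’s token count:

```python
import random

CHUNK_SIZE = 1024  # GPT-2's limit, in tokens

def random_chunk(tokens, chunk_size=CHUNK_SIZE, rng=random):
    """Return `chunk_size` consecutive tokens from ONE chapter's token list,
    starting at a random point, so a chunk can never run into the next chapter."""
    if len(tokens) <= chunk_size:
        return list(tokens)  # chapter is already short enough: take it all
    last_start = len(tokens) - chunk_size  # latest start that still fits
    start = rng.randint(0, last_start)     # randint includes both ends
    return tokens[start:start + chunk_size]

# Usage with a fake 3,000-token chapter
fake_chapter = [f"tok{i}" for i in range(3000)]
chunk = random_chunk(fake_chapter, rng=random.Random(2))
print(len(chunk), chunk[0], chunk[-1])
```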

Instead, I took the individual chapter 2 text files from my laptop, tokenized those, and fed them all into a Python dictionary that also included the source file (so we know where the sample comes from) and the total number of tokens (which I needed in order to know where to start my possible random chunks). The tokenizer returns the tokens in a list… and that list is inside a dictionary… and I’ve got another list containing all the dictionaries, so we’re creating a kind of Russian nesting doll of data.

```python
# os is for changing directories and listing files in those directories
import os

# NLTK tokenize is for tokenizing the text
from nltk.tokenize import word_tokenize

# random is for generating random numbers
import random

# Here's the folder with all my chapter 2 files
ch2directory = '/Users/qad/Documents/dsc/dsc_chapters/ch2'

# Change to that directory
os.chdir(ch2directory)

# Create a list for the results
ch2examples = []

# For the files in the directory with all my ch. 2 files...
for file in os.listdir(ch2directory):
    # If the file is a .txt file
    if file.endswith('.txt'):
        # Open that file and read it
        with open(file, 'r') as f:
            ch2text = f.read()
        # Tokenize the file
        ch2tokenized = word_tokenize(ch2text)
        # Count the length of the tokenized file
        ch2length = len(ch2tokenized)
        # Create a dictionary with the filename, tokenized length, & text
        ch2data = {'book': file, 'length': ch2length, 'text': ch2tokenized}
        # Add that data to the list
        ch2examples.append(ch2data)
        # Write out the filename and the length
        print(ch2data['book'] + ' - ' + str(ch2data['length']))
```

```
sample_sample_sample_sample_100c_kristys_worst_idea_2_517_0_0_0.txt - 1024
sample_sample_sample_sample_sample_064c_dawns_family_feud_2_992_13_0_0_0.txt - 1024
sample_sample_001c_kristys_great_idea_2_371_5.txt - 1024
sample_sample_sample_034c_mary_anne_and_too_many_boys_2_1392_1_0.txt - 1024
sample_sample_066c_maid_mary_anne_2_109_0.txt - 1024
sample_sample_sample_sample_sample_sample_099c_staceys_broken_heart_2_3534_0_0_0_0_0.txt - 1024
sample_sample_057c_dawn_saves_the_planet_2_621_1.txt - 1024
sample_sample_sample_sample_sample_m03c_mallory_and_the_ghost_cat_2_1019_0_0_0_0.txt - 1024
sample_sample_sample_m07c_dawn_and_the_disappearing_dogs_2_429_1_0.txt - 1024
sample_sample_sample_025c_mary_anne_and_the_search_for_tigger_2_910_0_0.txt - 1024
sample_sample_sample_m29c_stacey_and_the_fashion_victim_2_1058_0_0.txt - 1024
sample_sample_046c_mary_anne_misses_logan_2_1324_2.txt - 1024
sample_sample_sample_125c_mary_anne_in_the_middle_2_2347_0_0.txt - 1024
sample_sample_011c_kristy_and_the_snobs_2_166_0.txt - 1024
sample_sample_sample_014c_hello_mallory_2_511_1_0.txt - 1024
sample_sample_sample_sample_sample_sample_sample_m22c_stacey_and_the_haunted_masquerade_2_856_0_0_0_0_0_0.txt - 1024
sample_sample_sample_057c_dawn_saves_the_planet_2_496_0_0.txt - 1024
sample_sample_sample_m19c_kristy_and_the_missing_fortune_2_361_0_0.txt - 1024
sample_sample_sample_sample_sample_017c_mary_annes_bad_luck_mystery_2_845_0_0_0_0.txt - 1024
sample_m26c_dawn_schafer_undercover_babysitter_2_1162.txt - 1024
sample_029c_mallory_and_the_mystery_diary_2_91.txt - 1040
sample_sample_sample_054c_mallory_and_the_dream_horse_2_202_0_0.txt - 1024
sample_033c_claudia_and_the_great_search_2_2342.txt - 1026
008c_boy_crazy_stacey_2.txt - 1907
sample_sample_sample_022c_jessi_ramsey_petsitter_2_653_1_0.txt - 1024
sample_sample_123c_claudias_big_party_2_457_0.txt - 1024
sample_m10c_stacey_and_the_mystery_money_2_360.txt - 1024
sample_042c_jessi_and_the_dance_school_phantom_2_1077.txt - 1027
009c_the_ghost_at_dawns_house_2.txt - 2006
sample_sample_sample_sample_sample_m03c_mallory_and_the_ghost_cat_2_36_1_0_0_0.txt - 1024
sample_sample_064c_dawns_family_feud_2_128_6.txt - 1024
sample_sample_sample_sample_sample_006c_kristys_big_day_2_585_0_0_0_0.txt - 1024
sample_sample_059c_mallory_hates_boys_and_gym_2_597_1.txt - 1024
sample_sample_sample_sample_sample_sample_sample_039c_poor_mallory_2_1445_0_0_0_0_0_0.txt - 1024
sample_sample_sample_005c_dawn_and_the_impossible_three_2_130_6_0.txt - 1024
sample_sample_sample_012c_claudia_and_the_new_girl_2_1274_2_0.txt - 1024
sample_sample_m11c_claudia_and_the_mystery_at_the_museum_2_1339_0.txt - 1024
sample_130c_staceys_movie_2_1684.txt - 1024
sample_063c_claudias_freind_friend_2_1773.txt - 1024
sample_sample_100c_kristys_worst_idea_2_1852_3.txt - 1024
123c_claudias_big_party_2.txt - 3439
sample_088c_farewell_dawn_2_2041.txt - 1024
sample_sample_021c_mallory_and_the_trouble_with_twins_2_725_0.txt - 1024
sample_sample_sample_sample_sample_sample_sample_068c_jessi_and_the_bad_babysitter_2_1717_0_0_0_0_0_0.txt - 1024
sample_sample_sample_m02c_beware_dawn_2_725_0_0.txt - 1024
sample_048c_jessis_wish_2_1846.txt - 1024
sample_sample_sample_sample_125c_mary_anne_in_the_middle_2_2347_0_0_0.txt - 1024
sample_sample_068c_jessi_and_the_bad_babysitter_2_1717_3.txt - 1024
sample_sample_013c_goodbye_stacey_goodbye_2_718_3.txt - 1024
sample_sample_sample_103c_happy_holidays_jessi_2_2348_0_0.txt - 1024
sample_sample_012c_claudia_and_the_new_girl_2_552_1.txt - 1024
sample_sample_sample_074c_kristy_and_the_copycat_2_1968_0_0.txt - 1024
sample_sample_sample_serr3c_shannons_story_2_860_0_0.txt - 1024
sample_sample_sample_sample_sample_079c_mary_anne_breaks_the_rules_2_701_3_0_0_0.txt - 1024
sample_sample_sample_sample_sample_070c_stacey_and_the_cheerleaders_2_1332_1_0_0_0.txt - 1024
sample_sample_sample_057c_dawn_saves_the_planet_2_665_2_0.txt - 1024
sample_sample_sample_sample_sample_001c_kristys_great_idea_2_371_2_0_0_0.txt - 1024
sample_sample_025c_mary_anne_and_the_search_for_tigger_2_338_2.txt - 1024
sample_046c_mary_anne_misses_logan_2_335.txt - 1026
sample_sample_057c_dawn_saves_the_planet_2_461_0.txt - 1024
sample_sample_042c_jessi_and_the_dance_school_phantom_2_557_1.txt - 1024
sample_sample_064c_dawns_family_feud_2_992_2.txt - 1024
sample_119c_staceys_ex_boyfriend_2_1677.txt - 1024
sample_sample_sample_109c_mary_anne_to_the_rescue_2_743_0_0.txt - 1024
sample_sample_108c_dont_give_up_mallory_2_2214_1.txt - 1024
sample_sample_039c_poor_mallory_2_1445_0.txt - 1024
sample_046c_mary_anne_misses_logan_2_309.txt - 1026
sample_sample_002c_claudia_and_the_phantom_phone_calls_2_1387_18.txt - 1024
sample_012c_claudia_and_the_new_girl_2_552.txt - 1028
sample_sample_sample_sample_022c_jessi_ramsey_petsitter_2_653_1_0_0.txt - 1024
sample_sample_sample_sample_m01c_stacey_and_the_mystery_ring_2_209_1_0_0.txt - 1024
sample_sample_029c_mallory_and_the_mystery_diary_2_956_6.txt - 1024
sample_sample_sample_sample_sample_100c_kristys_worst_idea_2_2471_7_0_0_0.txt - 1024
sample_114c_the_secret_life_of_mary_anne_spier_2_1528.txt - 1025
sample_101c_claudia_kishi_middle_school_dropout_2_811.txt - 1025
sample_sample_079c_mary_anne_breaks_the_rules_2_1376_5.txt - 1024
sample_m33c_stacey_and_the_stolen_hearts_2_932.txt - 1024
002c_claudia_and_the_phantom_phone_calls_2.txt - 2528
sample_099c_staceys_broken_heart_2_3224.txt - 1024
sample_050c_dawns_big_date_2_789.txt - 1025
sample_sample_sample_sample_sample_064c_dawns_family_feud_2_329_9_0_0_0.txt - 1024
sample_sample_098c_dawn_and_too_many_sitters_2_104_2.txt - 1024
sample_sample_024c_kristy_and_the_mothers_day_surprise_2_1269_4.txt - 1024
sample_sample_sample_131c_the_fire_at_mary_annes_house_2_1155_0_0.txt - 1024
sample_sample_sample_sample_107c_mind_your_own_business_kristy_2_2551_5_0_0.txt - 1024
sample_sample_079c_mary_anne_breaks_the_rules_2_1376_4.txt - 1024
sample_m24c_mary_anne_and_the_silent_witness_2_1027.txt - 1024
sample_sample_sample_098c_dawn_and_too_many_sitters_2_380_2_0.txt - 1024
sample_sample_sample_007c_claudia_and_mean_jeanine_2_78_0_0.txt - 1024
sample_sample_054c_mallory_and_the_dream_horse_2_1804_4.txt - 1024
m31c_mary_anne_and_the_music_box_secret_2.txt - 2487
m11c_claudia_and_the_mystery_at_the_museum_2.txt - 2869
sample_sample_sample_sample_sample_sample_053c_kristy_for_president_2_1083_8_0_0_0_0.txt - 1024
sample_sample_029c_mallory_and_the_mystery_diary_2_956_7.txt - 1024
m07c_dawn_and_the_disappearing_dogs_2.txt - 2940
sample_059c_mallory_hates_boys_and_gym_2_2269.txt - 1024
sample_sample_m34c_mary_anne_and_the_haunted_bookstore_2_1783_0.txt - 1024
sample_sample_076c_staceys_lie_2_977_3.txt - 1024
sample_123c_claudias_big_party_2_911.txt - 1024
sample_sample_m01c_stacey_and_the_mystery_ring_2_939_2.txt - 1024
014c_hello_mallory_2.txt - 2229
sample_sample_074c_kristy_and_the_copycat_2_2537_7.txt - 1024
sample_sample_sample_sample_sample_005c_dawn_and_the_impossible_three_2_130_6_0_0_0.txt - 1024
049c_claudia_and_the_genius_of_elm_street_2.txt - 2751
sample_sample_sample_sample_081c_kristy_and_mr_mom_2_115_1_0_0.txt - 1024
sample_001c_kristys_great_idea_2_124.txt - 1027
sample_sample_039c_poor_mallory_2_1445_1.txt - 1024
```

sample_sample_108c_dont_give_up_mallory_2_2214_0.txt - 1024

sample_sample_sample_sample_sample_124c_stacey_mcgill_matchmaker_2_1626_0_0_0_0.txt - 1024

sample_sample_042c_jessi_and_the_dance_school_phantom_2_557_0.txt - 1024

sample_sample_sample_085c_claudia_kishi_live_from_wsto_2_426_5_0.txt - 1024

sample_sample_sample_serr3c_shannons_story_2_860_0_1.txt - 1024

sample_sample_sample_046c_mary_anne_misses_logan_2_1662_0_0.txt - 1024

sample_051c_staceys_ex_best_friend_2_809.txt - 1024

sample_sample_131c_the_fire_at_mary_annes_house_2_449_0.txt - 1024

sample_sample_011c_kristy_and_the_snobs_2_516_0.txt - 1024

sample_sample_013c_goodbye_stacey_goodbye_2_718_2.txt - 1024

sample_sample_sample_127c_abbys_un_valentine_2_3782_0_0.txt - 1024

sample_sample_sample_sample_114c_the_secret_life_of_mary_anne_spier_2_2350_0_0_0.txt - 1024

sample_sample_sample_sample_sample_023c_dawn_on_the_coast_2_860_2_0_0_0.txt - 1024

sample_sample_sample_010c_logan_likes_mary_anne_2_326_0_0.txt - 1024

sample_sample_131c_the_fire_at_mary_annes_house_2_1044_0.txt - 1024

sample_sample_sample_sample_057c_dawn_saves_the_planet_2_621_3_0_0.txt - 1024

sample_sample_057c_dawn_saves_the_planet_2_21_0.txt - 1024

sample_sample_064c_dawns_family_feud_2_329_10.txt - 1024

sample_sample_100c_kristys_worst_idea_2_1852_2.txt - 1024

sample_sample_sample_sample_070c_stacey_and_the_cheerleaders_2_1332_0_0_0.txt - 1024

sample_087c_stacey_and_the_bad_girls_2_1433.txt - 1024

sample_sample_sample_sample_sample_sample_022c_jessi_ramsey_petsitter_2_653_1_0_0_0_0.txt - 1024

sample_sample_sample_123c_claudias_big_party_2_810_0_0.txt - 1024

sample_sample_066c_maid_mary_anne_2_2952_14.txt - 1024

sample_sample_059c_mallory_hates_boys_and_gym_2_597_0.txt - 1024

sample_sample_sample_sample_131c_the_fire_at_mary_annes_house_2_780_0_0_0.txt - 1024

sample_sample_sample_sample_m11c_claudia_and_the_mystery_at_the_museum_2_198_0_0_0.txt - 1024

sample_m19c_kristy_and_the_missing_fortune_2_830.txt - 1024

sample_108c_dont_give_up_mallory_2_2214.txt - 1027

sample_sample_049c_claudia_and_the_genius_of_elm_street_2_54_2.txt - 1024

sample_sample_005c_dawn_and_the_impossible_three_2_130_1.txt - 1024

sample_082c_jessi_and_the_troublemaker_2_733.txt - 1024

sample_m21c_claudia_and_the_recipe_for_danger_2_160.txt - 1024

sample_sample_073c_mary_anne_and_miss_priss_2_1863_6.txt - 1024

sample_sample_sample_sample_028c_welcome_back_stacey_2_926_4_0_0.txt - 1024

sample_sample_018c_staceys_mistake_2_446_0.txt - 1024

sample_sample_sample_096c_abbys_lucky_thirteen_2_524_10_0.txt - 1024

sample_sample_m35c_abby_and_the_notorius_neighbor_2_787_0.txt - 1024

sample_sample_sample_sample_sample_053c_kristy_for_president_2_708_2_0_0_0.txt - 1024

sample_sample_sample_055c_jessis_gold_medal_2_2540_8_0.txt - 1024

sample_sample_116c_abby_and_the_best_kid_ever_2_514_0.txt - 1024

083c_stacey_vs_the_bsc_2.txt - 2823

sample_sample_sample_sample_sample_060c_mary_annes_makeover_2_1294_1_0_0_0.txt - 1024

sample_122c_kristy_in_charge_2_71.txt - 1024

sample_sample_098c_dawn_and_too_many_sitters_2_1239_5.txt - 1024

sample_sample_075c_jessis_horrible_prank_2_626_2.txt - 1024

serr3c_shannons_story_2.txt - 2116

sample_sample_029c_mallory_and_the_mystery_diary_2_91_13.txt - 1024

005c_dawn_and_the_impossible_three_2.txt - 2569

sample_sample_sample_m22c_stacey_and_the_haunted_masquerade_2_856_0_0.txt - 1024

sample_sample_098c_dawn_and_too_many_sitters_2_102_4.txt - 1024

001c_kristys_great_idea_2.txt - 1625

sample_sample_014c_hello_mallory_2_1000_4.txt - 1024

sample_011c_kristy_and_the_snobs_2_668.txt - 1025

sample_060c_mary_annes_makeover_2_2576.txt - 1027

sample_sample_057c_dawn_saves_the_planet_2_621_0.txt - 1024

sample_sample_040c_claudia_and_the_middle_school_mystery_2_943_1.txt - 1024

sample_sample_125c_mary_anne_in_the_middle_2_2155_0.txt - 1024

sample_125c_mary_anne_in_the_middle_2_2614.txt - 1024

sample_sample_066c_maid_mary_anne_2_109_1.txt - 1024

sample_sample_sample_sample_sample_m36c_kristy_and_the_cat_burglar_2_268_0_0_0_0.txt - 1024

sample_sample_sample_sample_sample_sample_068c_jessi_and_the_bad_babysitter_2_1717_0_0_0_0_0.txt - 1024

sample_009c_the_ghost_at_dawns_house_2_638.txt - 1024

sample_046c_mary_anne_misses_logan_2_1324.txt - 1026

sample_sample_sample_sample_002c_claudia_and_the_phantom_phone_calls_2_1387_9_0_0.txt - 1024

sample_sample_sample_086c_mary_anne_and_camp_bsc_2_789_0_0.txt - 1024

sample_sample_001c_kristys_great_idea_2_371_6.txt - 1024

sample_sample_sample_046c_mary_anne_misses_logan_2_158_1_0.txt - 1024

sample_072c_dawn_and_the_we_heart_kids_club_2_1086.txt - 1025

sample_sample_sample_sample_081c_kristy_and_mr_mom_2_2114_1_0_0.txt - 1024

012c_claudia_and_the_new_girl_2.txt - 2373

sample_m26c_dawn_schafer_undercover_babysitter_2_1439.txt - 1024

sample_061c_jessi_and_the_awful_secret_2_1307.txt - 1024

sample_sample_sample_sample_055c_jessis_gold_medal_2_775_0_0_0.txt - 1024

sample_sample_m28c_abby_and_the_mystery_baby_2_1451_0.txt - 1024

099c_staceys_broken_heart_2.txt - 5450

sample_sample_sample_sample_sample_041c_mary_anne_vs_logan_2_542_0_0_0_0.txt - 1024

sample_sample_103c_happy_holidays_jessi_2_2735_6.txt - 1024

sample_sample_092c_mallorys_christmas_wish_2_2017_2.txt - 1024

sample_093c_mary_anne_and_the_memory_garden_2_1385.txt - 1034

sample_sample_sample_123c_claudias_big_party_2_457_0_0.txt - 1024

sample_sample_057c_dawn_saves_the_planet_2_621_2.txt - 1024

sample_sample_011c_kristy_and_the_snobs_2_166_3.txt - 1024

sample_sample_sample_073c_mary_anne_and_miss_priss_2_1863_9_0.txt - 1024

sample_sample_sample_sample_097c_claudia_and_the_worlds_cutest_baby_2_268_0_0_0.txt - 1024

sample_sample_075c_jessis_horrible_prank_2_626_0.txt - 1024

sample_sample_055c_jessis_gold_medal_2_1030_0.txt - 1024

sample_sample_sample_041c_mary_anne_vs_logan_2_256_0_0.txt - 1024

sample_035c_jessis_babysitter_2_476.txt - 1024

sample_sample_sample_sample_sample_114c_the_secret_life_of_mary_anne_spier_2_110_2_0_0_0.txt - 1024

sample_sample_046c_mary_anne_misses_logan_2_1324_1.txt - 1024

sample_sample_sample_sample_088c_farewell_dawn_2_1317_2_0_0.txt - 1024

sample_103c_happy_holidays_jessi_2_33.txt - 1027

sample_sample_sample_sample_009c_the_ghost_at_dawns_house_2_638_0_0_0.txt - 1024

sample_092c_mallorys_christmas_wish_2_921.txt - 1027

sample_sample_m23c_abby_and_the_secret_society_2_224_0.txt - 1024

m13c_mary_anne_and_the_library_mystery_2.txt - 3020

sample_060c_mary_annes_makeover_2_1294.txt - 1025

sample_068c_jessi_and_the_bad_babysitter_2_1034.txt - 1027

sample_m10c_stacey_and_the_mystery_money_2_411.txt - 1024

sample_sample_sample_sample_sample_sample_075c_jessis_horrible_prank_2_59_9_0_0_0_0.txt - 1024

sample_116c_abby_and_the_best_kid_ever_2_1913.txt - 1024

m12c_dawn_and_the_surfer_ghost_2.txt - 2682

031c_dawns_wicked_stepsister_2.txt - 2942

sample_sample_049c_claudia_and_the_genius_of_elm_street_2_54_0.txt - 1024

sample_061c_jessi_and_the_awful_secret_2_30.txt - 1025

sample_sample_sample_m33c_stacey_and_the_stolen_hearts_2_915_0_0.txt - 1024

sample_sample_sample_026c_claudia_and_the_sad_goodbye_2_914_0_0.txt - 1024

sample_sample_101c_claudia_kishi_middle_school_dropout_2_11_0.txt - 1024

sample_m03c_mallory_and_the_ghost_cat_2_1019.txt - 1025

sample_sample_sample_sample_sample_069c_get_well_soon_mallory_2_1364_4_0_0_0.txt - 1024

sample_sample_sample_047c_mallory_on_strike_2_194_0_0.txt - 1024

sample_sample_sample_122c_kristy_in_charge_2_999_0_0.txt - 1024

sample_sample_sample_sample_sample_118c_kristy_thomas_dog_trainer_2_117_0_0_0_0.txt - 1024

sample_077c_dwn_and_whitney_friends_forever_2_176.txt - 1025

sample_sample_m24c_mary_anne_and_the_silent_witness_2_706_0.txt - 1024

sample_sample_068c_jessi_and_the_bad_babysitter_2_1717_0.txt - 1024

sample_026c_claudia_and_the_sad_goodbye_2_914.txt - 1024

sample_sample_013c_goodbye_stacey_goodbye_2_718_0.txt - 1024

sample_sample_sample_sample_sample_sample_108c_dont_give_up_mallory_2_1769_0_0_0_0_0.txt - 1024

sample_074c_kristy_and_the_copycat_2_1026.txt - 1024

sample_066c_maid_mary_anne_2_850.txt - 1024

sample_sample_sample_sample_sample_sample_sample_sample_097c_claudia_and_the_worlds_cutest_baby_2_268_2_0_0_0_0_0_0.txt - 1024

sample_sample_056c_keep_out_claudia_2_2145_0.txt - 1024

sample_sample_m17c_dawn_and_the_halloween_mystery_2_1544_0.txt - 1024

sample_118c_kristy_thomas_dog_trainer_2_117.txt - 1024

sample_077c_dwn_and_whitney_friends_forever_2_360.txt - 1024

sample_sample_074c_kristy_and_the_copycat_2_2537_5.txt - 1024

sample_sample_099c_staceys_broken_heart_2_3000_0.txt - 1024

sample_sample_m34c_mary_anne_and_the_haunted_bookstore_2_1560_0.txt - 1024

sample_094c_stacey_mcgill_super_sitter_2_1195.txt - 1026

sample_sample_sample_sample_080c_mallory_pike_no_1_fan_2_1224_0_0_0.txt - 1024

sample_sample_sample_sample_sample_115c_jessis_big_break_2_923_0_0_0_0.txt - 1024

sample_sample_sample_sample_m07c_dawn_and_the_disappearing_dogs_2_429_0_0_0.txt - 1024

sample_sample_sample_sample_083c_stacey_vs_the_bsc_2_470_2_0_0.txt - 1024

sample_sample_098c_dawn_and_too_many_sitters_2_104_0.txt - 1024

sample_089c_kristy_and_the_dirty_diapers_2_1184.txt - 1024

sample_025c_mary_anne_and_the_search_for_tigger_2_910.txt - 1024

sample_sample_043c_staceys_emergency_2_879_0.txt - 1024

sample_sample_sample_sample_sample_m11c_claudia_and_the_mystery_at_the_museum_2_198_0_0_0_0.txt - 1024

sample_sample_sample_m08c_jessi_and_the_jewel_thieves_2_346_0_0.txt - 1024

sample_057c_dawn_saves_the_planet_2_467.txt - 1024

sample_sample_serr2c_logan_bruno_boy_babysitter_2_1371_0.txt - 1024

055c_jessis_gold_medal_2.txt - 3841

sample_109c_mary_anne_to_the_rescue_2_743.txt - 1024

sample_sample_017c_mary_annes_bad_luck_mystery_2_322_0.txt - 1024

sample_041c_mary_anne_vs_logan_2_256.txt - 1024

sample_sample_m01c_stacey_and_the_mystery_ring_2_939_1.txt - 1024

sample_sample_sample_124c_stacey_mcgill_matchmaker_2_743_0_0.txt - 1024

sample_sample_073c_mary_anne_and_miss_priss_2_233_0.txt - 1024

sample_sample_sample_sample_m04c_kristy_and_the_missing_child_2_1429_0_0_0.txt - 1024

sample_sample_sample_sample_089c_kristy_and_the_dirty_diapers_2_1184_0_0_0.txt - 1024

sample_sample_029c_mallory_and_the_mystery_diary_2_956_4.txt - 1024

sample_sample_116c_abby_and_the_best_kid_ever_2_1006_0.txt - 1024

sample_066c_maid_mary_anne_2_1884.txt - 1024

sample_sample_sample_m03c_mallory_and_the_ghost_cat_2_1019_0_0.txt - 1024

sample_sample_064c_dawns_family_feud_2_992_0.txt - 1024

sample_sample_025c_mary_anne_and_the_search_for_tigger_2_338_0.txt - 1024

sample_076c_staceys_lie_2_977.txt - 1034

sample_sample_108c_dont_give_up_mallory_2_2214_3.txt - 1024

sample_057c_dawn_saves_the_planet_2_665.txt - 1028

sample_sample_sample_004c_mary_anne_saves_the_day_2_201_11_0.txt - 1024

sample_109c_mary_anne_to_the_rescue_2_2790.txt - 1030

sample_102c_mary_anne_and_the_little_princess_2_81.txt - 1028

sample_sample_serr1c_logans_story_2_307_0.txt - 1024

sample_m30c_kristy_and_the_mystery_train_2_1076.txt - 1024

sample_sample_sample_089c_kristy_and_the_dirty_diapers_2_2168_2_0.txt - 1024

sample_sample_sample_114c_the_secret_life_of_mary_anne_spier_2_466_1_0.txt - 1024

sample_sample_053c_kristy_for_president_2_2255_2.txt - 1024